Dear followers,

I’m going to lay out the origins of some common words used to describe different automatons, and the ideal distinctions between them.

- Machine: an artificial system that completes a task (also mechanism)

- Automaton: used to describe an artificial system that functions by itself

- Drone: historically, an unskilled or replaceable worker. Used to describe machines that follow basic inputs, often still controlled by humans in some regard.

- Robot: used to describe an automated laborer, something that serves a purpose (also bot)

- Computer: used to describe an electronic processing system that “computes,” or solves problems, based on inputs

- Android: etymologically “man-like” or “almost man”; an automaton that looks like a man (also droid)

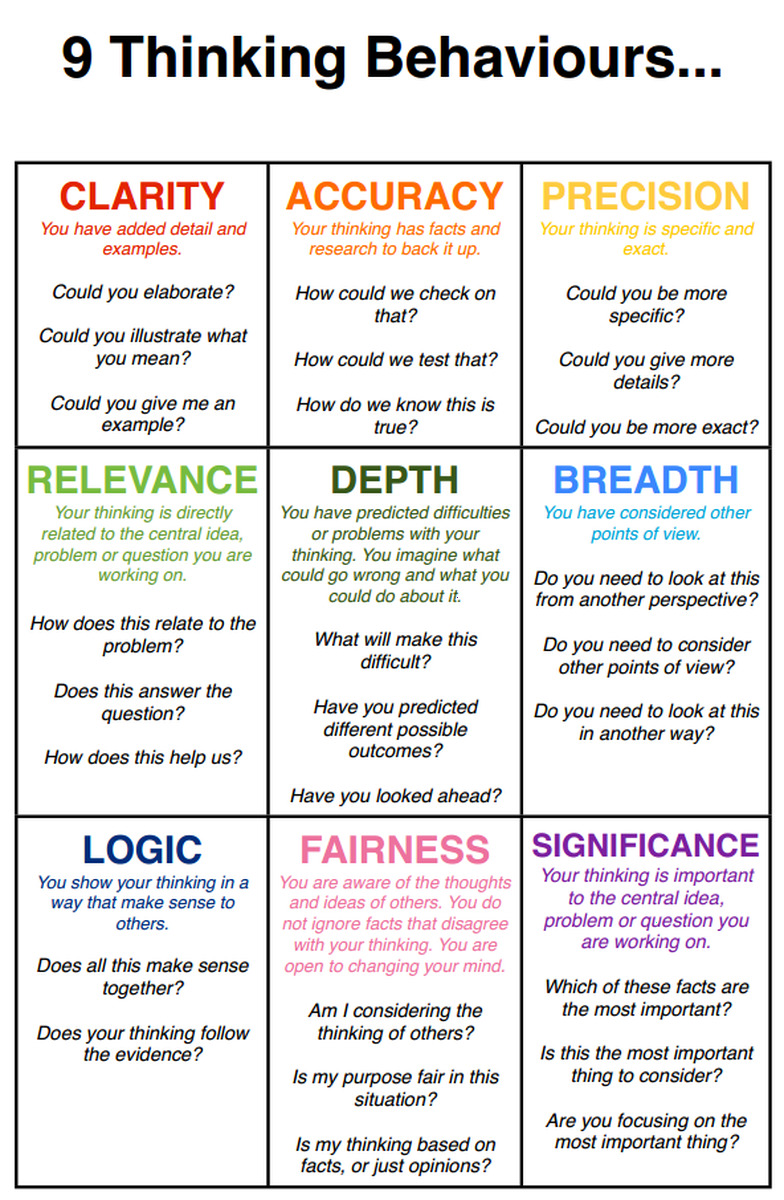

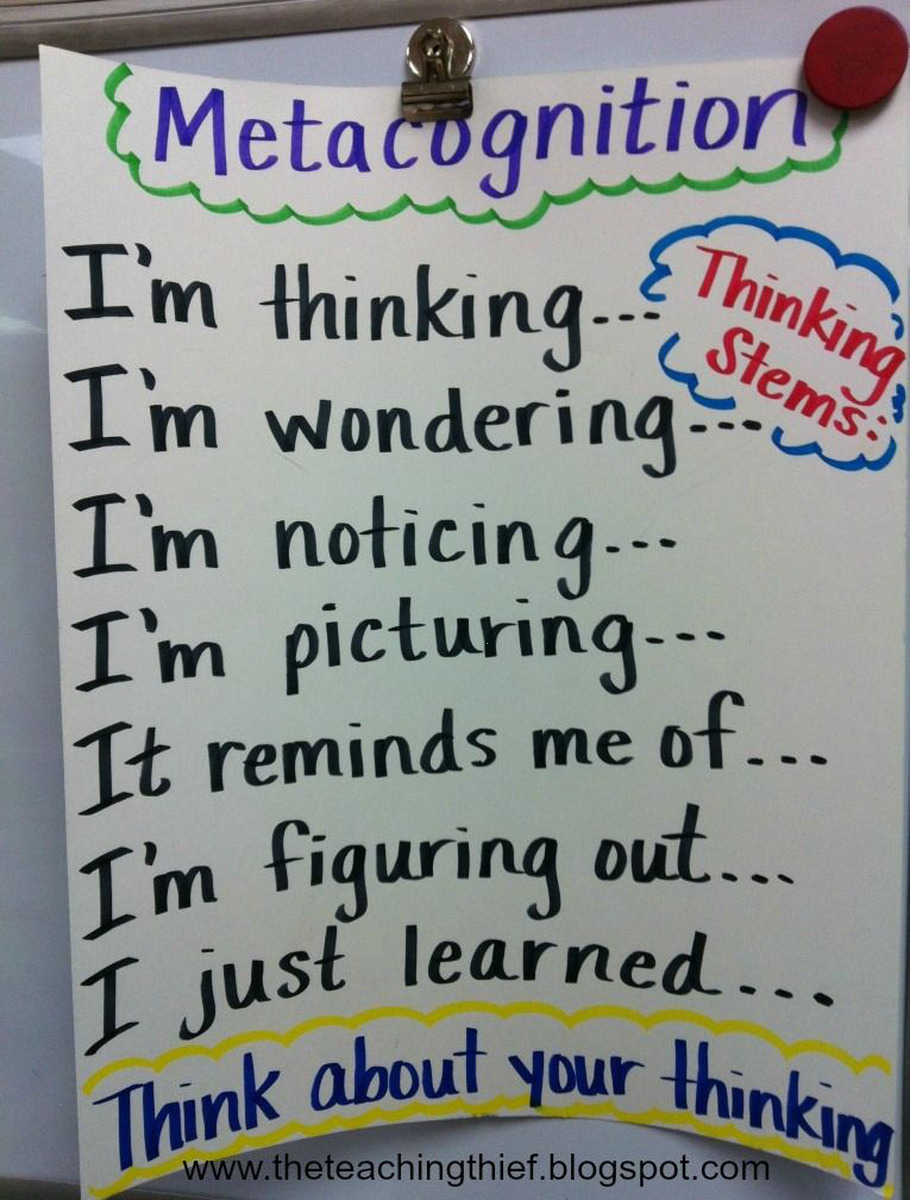

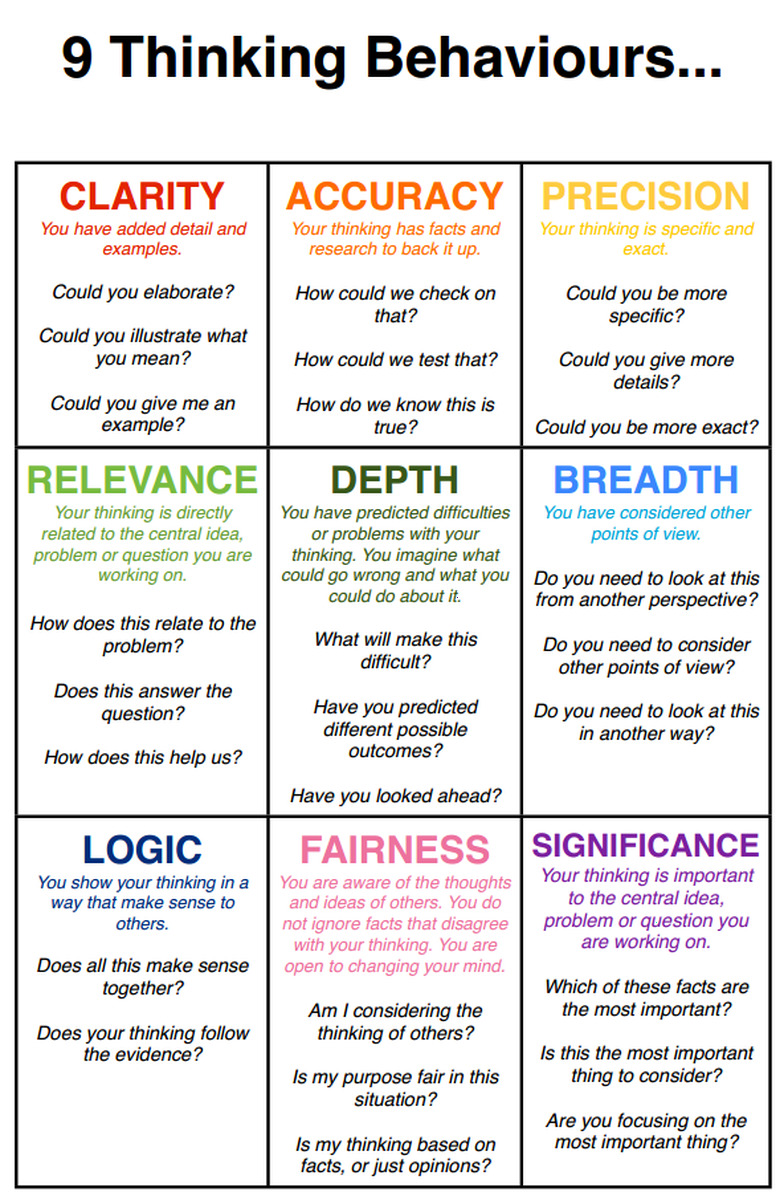

- Artificial intelligence: an automaton that shows sapient behavior and can analyze its own thinking process through metacognition (also AI)

However, language and terminology are fluid and dynamic. As our understanding of automated beings and artificial intelligence changes, so do the terms we use. There are plenty of automated beings nowadays called robots, but they are not laborers. That doesn’t nullify the fact that “robot” may be the best word we have to describe them. As a more specific example, this is why we’ve seen a shift from calling non-player characters in games “CPU” to calling them “AI.”

Technology is changing, and so are the terms we use. It may, or may not, be important to know the distinguishing features in the future.

Side note: I like the droideka from Star Wars. Always thought it was a cool design, but I also like the name because it stems from “android” but changes it so it no longer serves the same etymological function. It can be used to describe automated beings that have a corporeal form that is non-human.